From paper trails to digital paths: Rippling’s journey to the Documents Engine

![[Blog Hero] Automation Laptop](http://images.ctfassets.net/k0itp0ir7ty4/k3jg6n8pvX4cRXNnyXb7i/330c40bef2a88942bb3e6ffe904c1a70/automation_laptop_-_Spot.jpg)


One of Rippling’s core products is payroll, enabling companies to pay employees and contractors, both locally and globally, in a single unified pay run. Alongside payments, we handle all the tax filings and payments required by government agencies on behalf of employers and employees.

That’s easier said than done. Each agency has its own submission format, communication method, and compliance rules; some accept electronic filings, others still rely on fax, and file requirements can differ by regulation.

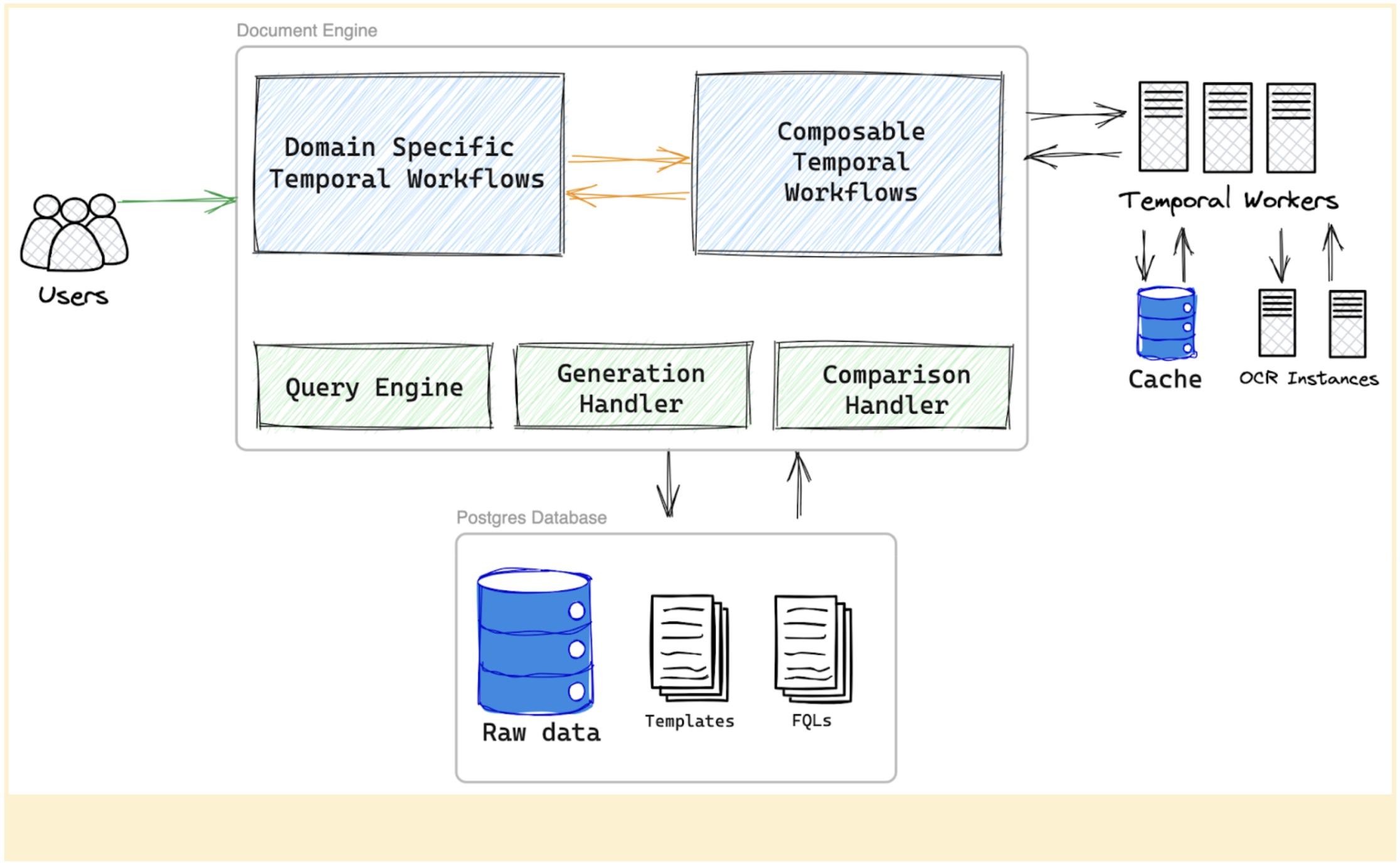

Rippling runs this process on an internal system called the Filings Engine. In this post, we’ll take a closer look at one of its core components: the Document Engine, which generates over 10 million documents every week for more than 1,000 agencies, ensuring every submission is accurate and compliant with agency requirements.

Breaking down requirements

“Filings” refers to the documents Rippling submits to government agencies on behalf of customers, typically to report payroll taxes, benefits, or other compliance data. These documents usually include key financial, operational, and regulatory details.

For most Rippling customers, the majority of filings relate to taxes. Each filing requires submitting detailed documents to the appropriate tax authority, outlining the amounts withheld from both employers and employees. These submissions happen asynchronously — on a weekly, monthly, quarterly, or annual basis — depending on the agency. Every tax authority defines its own document structure and formatting rules, and even minor deviations can result in rejected filings. Fortunately, these standards are generally stable over time, so our system can optimize for reliability rather than constantly adapting to complex and dynamic file formats.

Documents can be broadly classified based on their file formats:

XML: These documents adhere to a predefined XML Schema Definition (XSD) that the respective agency provides, such as those specified by the Federal/State Employment Taxes (FSET) program.

Fixed-width text files: Data in fixed-width text files is arranged in rows and columns, with each entry occupying a single row. Columns have predetermined widths specified in characters, dictating the maximum data capacity. Instead of delimiters, spaces are used to pad smaller data quantities, ensuring that the start of each column can be precisely located from the beginning of a line.

PDF: Documents presented in human-readable, fillable forms.

CSV/TSV: Delimited text files, which are comma- or tab-separated, and structured according to the agency’s specifications.

At a high level, these formats can be grouped into two categories: computer-friendly (XML, CSV, and fixed-width text files), and paper-friendly (PDF). Most generated documents share common elements, while PDF files typically require additional handling due to their interactive and layout-dependent nature.
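To make the fixed-width layout concrete, here is a minimal Go sketch of padding values into columns. The `Column` type, the `FormatRecord` helper, and the column names and widths are all hypothetical illustrations, not an agency specification or the Document Engine's actual code.

```go
package main

import (
	"fmt"
	"strings"
)

// Column describes one fixed-width column. Names and widths are hypothetical.
type Column struct {
	Name  string
	Width int // width in characters (this sketch assumes ASCII values)
}

// FormatRecord pads each value with trailing spaces up to its column width.
// It returns an error when a value does not fit, since a silently truncated
// or shifted column would produce a malformed line.
func FormatRecord(cols []Column, values []string) (string, error) {
	if len(cols) != len(values) {
		return "", fmt.Errorf("got %d values for %d columns", len(values), len(cols))
	}
	var b strings.Builder
	for i, c := range cols {
		v := values[i]
		if len(v) > c.Width {
			return "", fmt.Errorf("column %q: value %q exceeds width %d", c.Name, v, c.Width)
		}
		b.WriteString(v)
		b.WriteString(strings.Repeat(" ", c.Width-len(v)))
	}
	return b.String(), nil
}

func main() {
	cols := []Column{{"ssn", 9}, {"name", 10}, {"total_wage", 8}}
	line, err := FormatRecord(cols, []string{"1xx9", "Joe", "20600"})
	if err != nil {
		panic(err)
	}
	// %q makes the trailing padding visible.
	fmt.Printf("%q\n", line)
}
```

Because every column starts at a fixed offset, a reader can slice a field out of the line by position alone, which is why an oversized value is rejected rather than allowed to shift the columns after it.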

Journey of a document

Let’s consider how a new document is introduced into the system and explore the various stages it undergoes, from creation to submission:

End users receive a new requirement: The process begins when system end users, such as the compliance team, receive a new request to create a document for a regulatory body, for instance, Acme. Acme is already registered in the Filings Engine as an entity, along with all relevant details.

Agency issues new regulations: Acme has updated requirements specifying that the form must be submitted in PDF format, signed, and physically printed before delivery.

Create or adopt the form: The compliance team can either use the PDF form provided by Acme or generate a new PDF that includes the necessary form fields to meet the agency’s requirements.

Upload the blank form: Once finalized, the blank PDF is uploaded to the Document Engine, where it becomes available for further configuration.

Configure the form and set an effective date: The form is then configured using FQL (Filings Query Language) expressions, which determine how data populates each field. Each version of a document is assigned an effective date, defining when that version should be used for submission. Maintaining versioning by effective date allows the system to reproduce documents as they existed for any filing period, ensuring bi-temporality and accurate historical reconstruction.

Generate and validate the document: The document is generated based on the specific context of the relevant customer or employee. It then passes through an audit phase, where automated assertions validate its accuracy.

Approve and submit: Once approved, the document moves to the next service in the Filings Engine, which handles the actual submission, either through electronic transmission or physical methods like printing and faxing.

In short, the goal of the Document Engine is to ensure each generated file — whether it’s an agency filing, customer notice, or power of attorney — is configured and displayed correctly, before submission.
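The effective-date step above can be sketched as a lookup that picks the latest template version already in force for a filing date. This is a simplified illustration under stated assumptions: `TemplateVersion`, `VersionFor`, and the version IDs are hypothetical, and the sketch models only the effective-date axis. A bi-temporal model would also track when each version was recorded.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// TemplateVersion is a hypothetical record for one version of a document template.
type TemplateVersion struct {
	ID            string
	EffectiveFrom time.Time
}

// ErrNoVersion is returned when no version is in force on the requested date.
var ErrNoVersion = errors.New("no template version effective on that date")

// VersionFor returns the version with the latest EffectiveFrom that is
// not after filingDate.
func VersionFor(versions []TemplateVersion, filingDate time.Time) (TemplateVersion, error) {
	var best TemplateVersion
	found := false
	for _, v := range versions {
		if v.EffectiveFrom.After(filingDate) {
			continue // not yet in force on the filing date
		}
		if !found || v.EffectiveFrom.After(best.EffectiveFrom) {
			best, found = v, true
		}
	}
	if !found {
		return TemplateVersion{}, ErrNoVersion
	}
	return best, nil
}

// date builds a UTC midnight timestamp from plain integers.
func date(y, m, d int) time.Time {
	return time.Date(y, time.Month(m), d, 0, 0, 0, 0, time.UTC)
}

func main() {
	versions := []TemplateVersion{
		{ID: "acme-form-v1", EffectiveFrom: date(2023, 1, 1)},
		{ID: "acme-form-v2", EffectiveFrom: date(2024, 1, 1)},
	}
	v, err := VersionFor(versions, date(2023, 6, 30))
	if err != nil {
		panic(err)
	}
	fmt.Println(v.ID) // the version in force in mid-2023
}
```

Regenerating a filing for an earlier period then means passing that period's date, not today's, so later form revisions do not leak into historical documents.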

Modeling data to document

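The system initiates the data preparation process according to the agency template. For example, suppose that agency ABC requires employee data in the following CSV format:

ssn, name, total wage, total tax, Q1 tax, Q2 tax, Q3 tax, Q4 tax

1xx9, Joe, 20600, 6000, 1500, 1500, 1500, 1500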

All wage and tax calculations are completed before document generation and already stored in a Postgres database. Postgres, our relational database of choice, uses a fixed schema, which means that the source data is organized in predefined structures such as company, employee, and tax tables.

Modeling data directly to match every agency’s document format, however, quickly becomes complex. Each agency defines its own schema, and these requirements can evolve with new clauses or form revisions. Continuously modifying the schema to mirror every change would be error-prone and impossible to scale.

To solve this, we established a clear distinction between the data layer and the render layer from the outset. The raw data stays in the database in its conventional relational form, and we use FQL, a language tailored to the intricacies of the filing domain, to populate documents.

Templates within the system are generated by the compliance team using FQL expressions. Returning to the previous CSV example, the document could be configured in FQL as follows:

ssn, name, total wage, total tax, Q1 tax, Q2 tax, Q3 tax, Q4 tax

employee.ssn, employee.name, employee.wages.total, (employee.wages.q1 + employee.wages.q2 + employee.wages.q3 + employee.wages.q4), employee.wages.q1, employee.wages.q2, employee.wages.q3, employee.wages.q4

FQL offers the following functionalities:

Arithmetic operations: Basic math operators such as addition (+), subtraction (-), multiplication (*), and division (/)

Functional transformations: Common helpers like trimming trim(), mapping map(), rounding down floor(), looping over lists, and more

Domain-specific variables: Custom objects like client.employee[0].address that map directly to filing data structures

This approach enables the source data to maintain a consistent schema while allowing FQL to render and adjust documents dynamically as requirements change. Each document template is versioned using bi-temporality, which allows Rippling to reproduce any document exactly as it appeared at a given point in time, even as FQL expressions or form structures evolve. For example, if a new formula is later added to calculate total work hours or a new field is introduced, the system can still regenerate documents with complete accuracy.
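The split between a stable data layer and a configurable render layer can be illustrated with a small Go sketch. Here a template is a list of columns, each pairing a header with a function that derives the cell value. In the real system that role is played by an FQL expression, so the `Employee`, `Column`, `AgencyABCTemplate`, and `Render` names below are hypothetical stand-ins rather than the engine's actual API.

```go
package main

import (
	"encoding/csv"
	"os"
	"strconv"
)

// Employee mirrors a row from the data layer. Field names are hypothetical.
type Employee struct {
	SSN          string
	Name         string
	TotalWage    int
	QuarterlyTax [4]int
}

// Column pairs a header with a function that derives the cell value.
type Column struct {
	Header string
	Value  func(e Employee) string
}

// AgencyABCTemplate describes the column layout for one agency.
// Changing the layout means editing this list, not the Employee schema.
func AgencyABCTemplate() []Column {
	cols := []Column{
		{"ssn", func(e Employee) string { return e.SSN }},
		{"name", func(e Employee) string { return e.Name }},
		{"total wage", func(e Employee) string { return strconv.Itoa(e.TotalWage) }},
		{"total tax", func(e Employee) string {
			sum := 0
			for _, q := range e.QuarterlyTax {
				sum += q
			}
			return strconv.Itoa(sum)
		}},
	}
	for q := 0; q < 4; q++ {
		q := q // capture the loop variable for the closure
		cols = append(cols, Column{
			Header: "Q" + strconv.Itoa(q+1) + " tax",
			Value:  func(e Employee) string { return strconv.Itoa(e.QuarterlyTax[q]) },
		})
	}
	return cols
}

// Render evaluates every column for every employee, header row first.
func Render(cols []Column, employees []Employee) [][]string {
	header := make([]string, len(cols))
	for i, c := range cols {
		header[i] = c.Header
	}
	rows := [][]string{header}
	for _, e := range employees {
		row := make([]string, len(cols))
		for i, c := range cols {
			row[i] = c.Value(e)
		}
		rows = append(rows, row)
	}
	return rows
}

func main() {
	employees := []Employee{
		{SSN: "1xx9", Name: "Joe", TotalWage: 20600, QuarterlyTax: [4]int{1500, 1500, 1500, 1500}},
	}
	w := csv.NewWriter(os.Stdout)
	if err := w.WriteAll(Render(AgencyABCTemplate(), employees)); err != nil {
		panic(err)
	}
}
```

Adding a column or changing a formula touches only the template list, which is the property the render layer is meant to provide.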

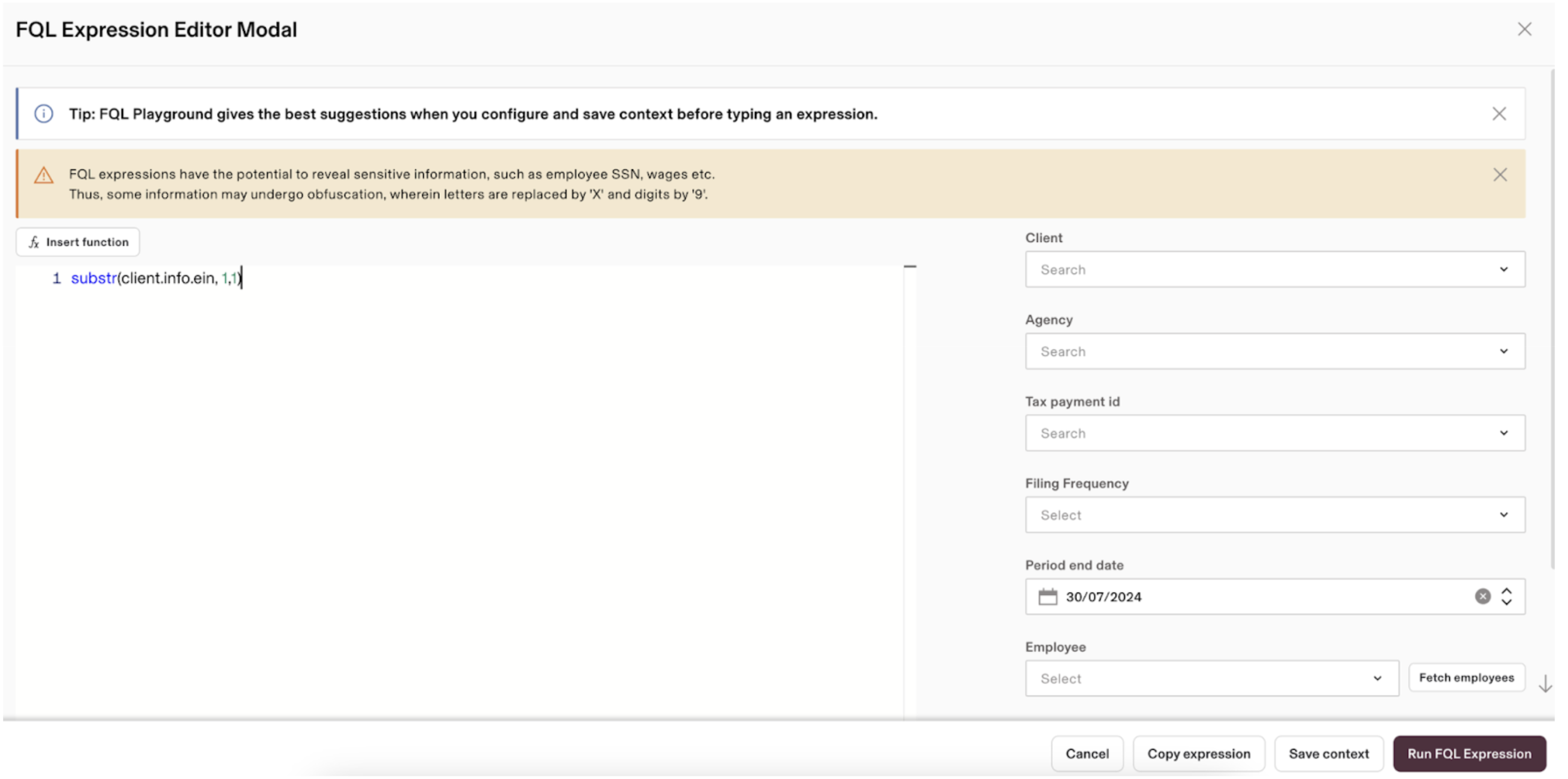

To make FQL accessible to non-technical users, we built an FQL playground for experimenting with expressions against staging data. A specific FQL context is passed for the agency/client/document to maintain expression consistency when generating documents. For instance:

evaluator := fql.NewEvaluator(temporal_effective_at_date, agencyID, clientID)

result, err := evaluator.Eval("client.info.address")

In this example, evaluating the expression client.info.address links the client variable to the corresponding clientID in the database, resolves the nested variable info, and returns the client’s address. FQL variables dictate how to transform the database's relational model into FQL expression results.
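As a rough illustration of how a dotted variable path might be resolved, the sketch below walks a path through nested maps. It is an assumption-laden simplification: the `Resolve` function and the in-memory context are hypothetical, the address is a placeholder, and the real evaluator resolves variables against the relational database rather than a map.

```go
package main

import (
	"fmt"
	"strings"
)

// Resolve walks a dotted path such as "client.info.address" through
// nested maps and returns the value found at the end of the path.
func Resolve(ctx map[string]any, path string) (any, error) {
	var cur any = ctx
	for _, key := range strings.Split(path, ".") {
		m, ok := cur.(map[string]any)
		if !ok {
			return nil, fmt.Errorf("%q: cannot look up %q on a non-object value", path, key)
		}
		next, ok := m[key]
		if !ok {
			return nil, fmt.Errorf("%q: unknown variable %q", path, key)
		}
		cur = next
	}
	return cur, nil
}

func main() {
	// Hypothetical context, as if already loaded for one clientID.
	ctx := map[string]any{
		"client": map[string]any{
			"info": map[string]any{
				"address": "123 Example St", // placeholder value
			},
		},
	}
	addr, err := Resolve(ctx, "client.info.address")
	if err != nil {
		panic(err)
	}
	fmt.Println(addr)
}
```

Returning an error for an unknown segment, instead of an empty value, keeps a mistyped expression from silently producing a blank field on a filing.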

Prioritizing usability for operations

As mentioned earlier, every agency establishes a specific file format, and this format or the template itself may change over time. Therefore, it is crucial to ensure that all data is accurately entered following the guidelines outlined in the documents. This means filling the correct fields in PDFs, maintaining proper column alignment for CSV or TSV documents, and adhering to the structural rules for formats like FSET.

The operations team manages this process through a configuration interface. They start by analyzing tax agency guidelines and tracking legislative updates, then translate those requirements into functional logic. Using FQL, they encode these rules directly into the platform so that every document reflects the most recent regulations.

Once configured, each output is rigorously validated. The team reviews both the calculated results and the final filing artifacts, verifying their accuracy before submitting them to the relevant tax agency. This combination of deep tax expertise and technical skill ensures the software is a reliable tool for every user.

Let’s look at how this configuration process works for setting up PDFs.

Configuring PDFs

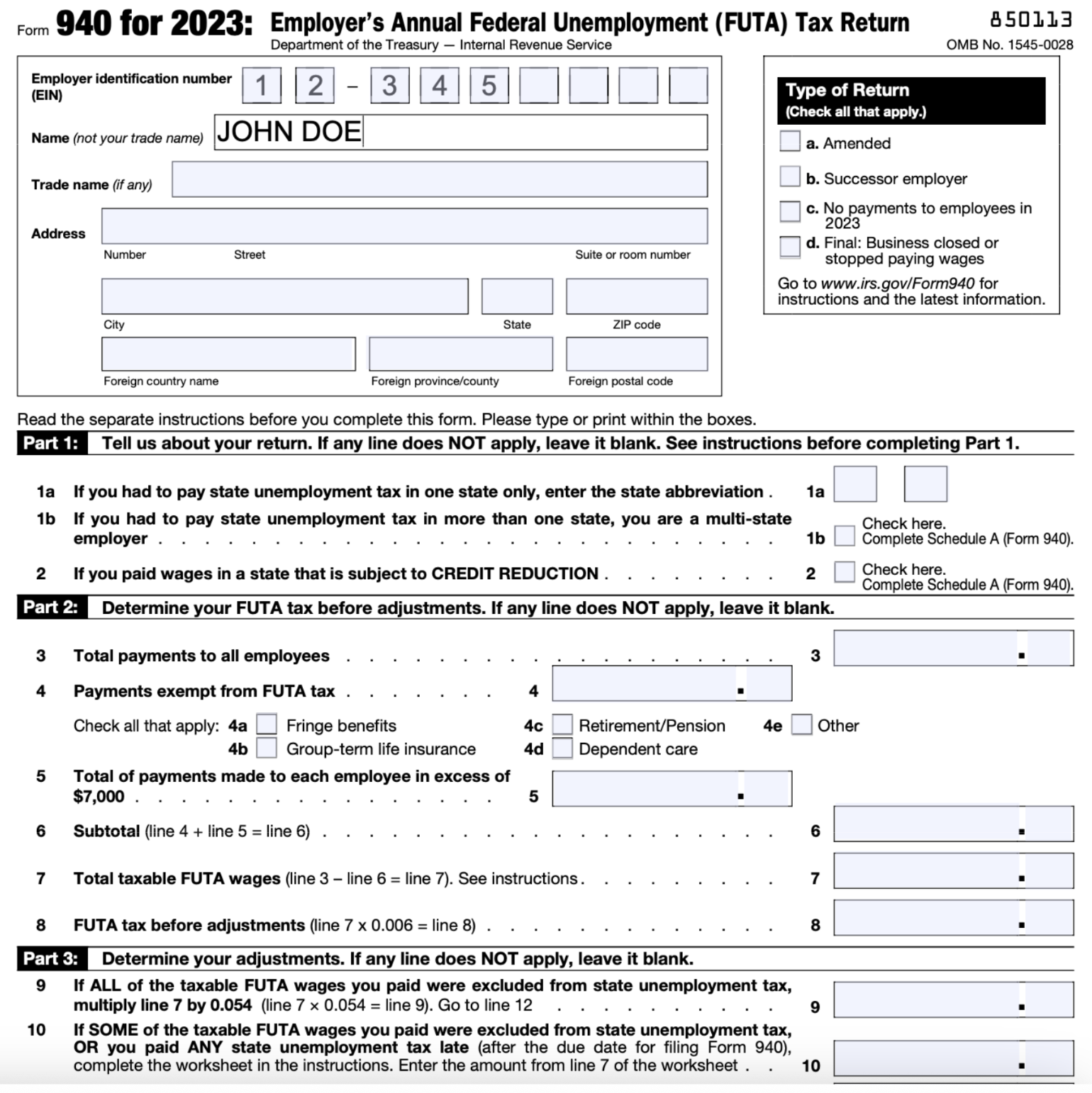

Agencies typically provide PDF templates that include pre-defined fillable form fields. These files can often be downloaded directly from the agency’s website and follow the same structure used in standard PDF readers.

IRS 940 PDF TEMPLATE

To make the IRS 940 form usable across multiple clients and employees, we need to:

Identify which fields are editable in the PDF.

Identify what data needs to be resolved and printed on the PDF.

Identify the location of the fields in the PDF.

Identify which fields are editable

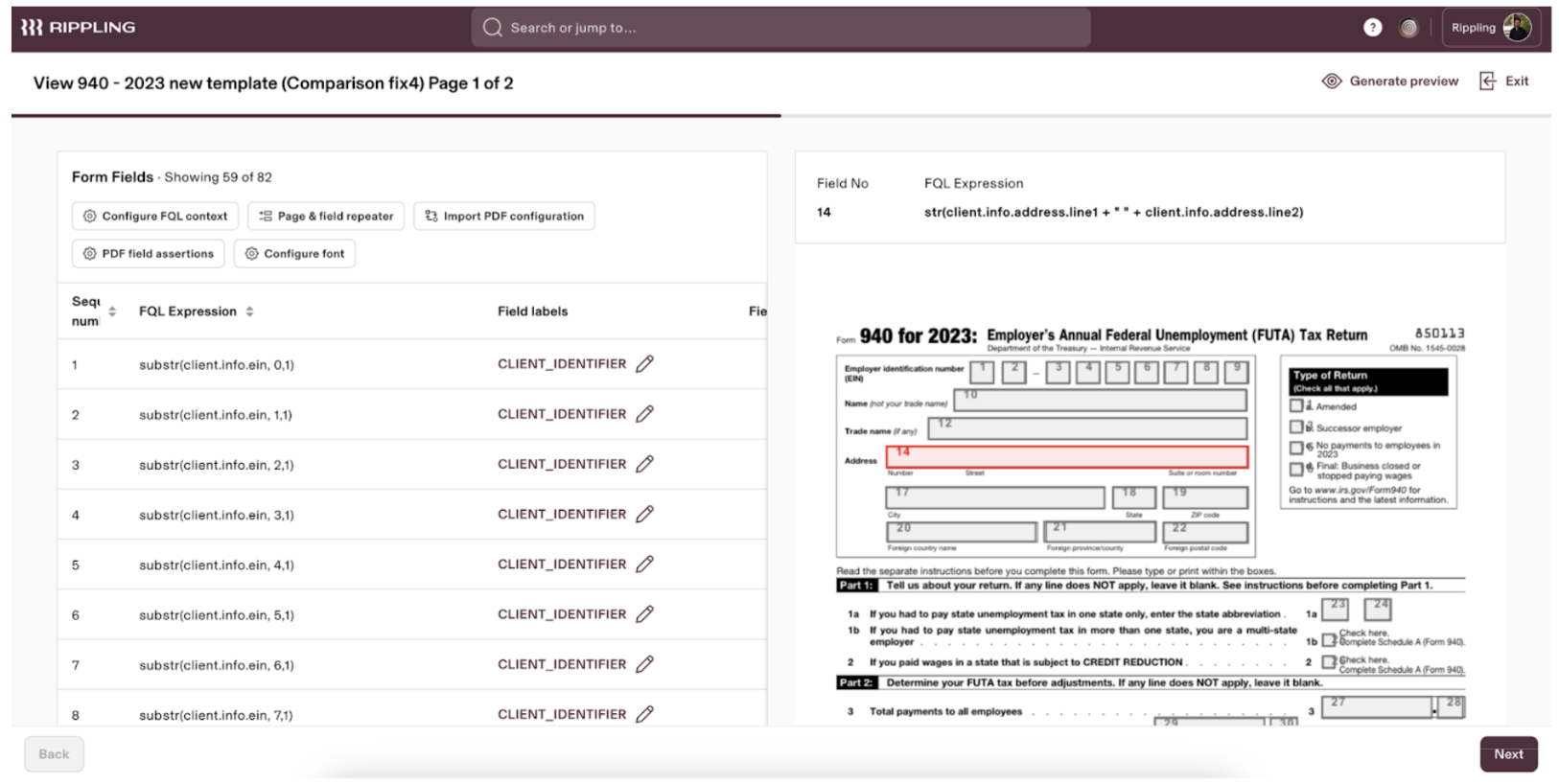

Each fillable PDF provided by the agency comes with editable fields, allowing them to be filled out using a browser or tools like Acrobat. Once these PDFs are uploaded to our system, we detect the fillable fields and generate metadata, including field coordinates, editability status, field-level validations, and more. We utilize this metadata to render the PDFs in the UI, showing users the location of each field and the type of data expected.

Identify what data needs to be resolved and printed on the PDF

With the fields identified in the PDF, we understand what data belongs in each box. Next, we need to dynamically populate client or employee data into the specific fields within the PDF. This is accomplished using FQL expressions. As explained earlier, FQL expressions help query, evaluate, and resolve the necessary data for each required attribute in the PDF.

Below is a screenshot of the FQL Playground, our in-house tool for testing and constructing FQL expressions.

Once we have FQL expressions associated with every field, the query engine can then resolve the FQL expressions into values and render them on the PDF document.

Identify the location of the fields in the PDF

This step is essential for the Document Engine to determine where to place values in the PDF. Each field in the PDF template has associated metadata that includes its coordinates and dimensions. When the template is uploaded to the system, the Document Engine uses this metadata to locate the fields and accurately print them on the document.
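Taken together, the three steps suggest a data shape along the following lines. This is a hedged sketch only: the `FieldMeta` and `Placement` types, the `Plan` function, the field names, and the coordinates are hypothetical, and the evaluator is a stub standing in for the FQL query engine. Actual drawing onto the PDF is out of scope here.

```go
package main

import "fmt"

// FieldMeta is hypothetical metadata extracted from one fillable PDF field.
type FieldMeta struct {
	Name       string
	Page       int
	X, Y       float64 // position on the page, in PDF points
	Width      float64
	Height     float64
	ReadOnly   bool
	Expression string // FQL expression configured for this field
}

// Placement is a resolved value together with the field it belongs to.
type Placement struct {
	Field FieldMeta
	Value string
}

// Plan evaluates the expression of every editable, configured field and
// returns the values along with where they should be printed.
func Plan(fields []FieldMeta, eval func(expr string) (string, error)) ([]Placement, error) {
	var out []Placement
	for _, f := range fields {
		if f.ReadOnly || f.Expression == "" {
			continue // nothing to fill in
		}
		v, err := eval(f.Expression)
		if err != nil {
			return nil, fmt.Errorf("field %q: %w", f.Name, err)
		}
		out = append(out, Placement{Field: f, Value: v})
	}
	return out, nil
}

func main() {
	fields := []FieldMeta{
		{Name: "employer_address", Page: 1, X: 72, Y: 640, Width: 300, Height: 14, Expression: "client.info.address"},
		{Name: "for_official_use", Page: 1, X: 72, Y: 100, Width: 300, Height: 14, ReadOnly: true},
	}
	// Stub evaluator standing in for the FQL query engine.
	values := map[string]string{"client.info.address": "123 Example St"}
	eval := func(expr string) (string, error) {
		v, ok := values[expr]
		if !ok {
			return "", fmt.Errorf("no value for %q", expr)
		}
		return v, nil
	}
	placements, err := Plan(fields, eval)
	if err != nil {
		panic(err)
	}
	for _, p := range placements {
		fmt.Printf("page %d at (%.0f, %.0f): %s\n", p.Field.Page, p.Field.X, p.Field.Y, p.Value)
	}
}
```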

This is just one aspect of configuring documents within the system. Similarly, other file formats can be configured through the user interface. These files can be generated in large quantities, and their audit reports, along with assertions and regression checks, are readily accessible to the compliance team.
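An automated assertion of the kind mentioned above could look like the following Go sketch, which checks that quarterly tax amounts add up to the reported total. The `Row` and `Assertion` types and the `Audit` function are hypothetical, and the second sample row is deliberately wrong and made up for illustration.

```go
package main

import "fmt"

// Row is a hypothetical view of one generated document row.
type Row struct {
	SSN          string
	TotalTax     int
	QuarterlyTax [4]int
}

// Assertion is a named check that returns an error when a row is invalid.
type Assertion struct {
	Name  string
	Check func(Row) error
}

// QuarterlySumMatchesTotal asserts that the four quarterly amounts add up
// to the reported total.
var QuarterlySumMatchesTotal = Assertion{
	Name: "quarterly taxes sum to total tax",
	Check: func(r Row) error {
		sum := 0
		for _, q := range r.QuarterlyTax {
			sum += q
		}
		if sum != r.TotalTax {
			return fmt.Errorf("quarters sum to %d, total is %d", sum, r.TotalTax)
		}
		return nil
	},
}

// Audit runs every assertion against every row and collects failure messages.
func Audit(rows []Row, assertions []Assertion) []string {
	var failures []string
	for _, r := range rows {
		for _, a := range assertions {
			if err := a.Check(r); err != nil {
				failures = append(failures, fmt.Sprintf("%s: %s: %v", r.SSN, a.Name, err))
			}
		}
	}
	return failures
}

func main() {
	rows := []Row{
		{SSN: "1xx9", TotalTax: 6000, QuarterlyTax: [4]int{1500, 1500, 1500, 1500}},
		{SSN: "2xx7", TotalTax: 6000, QuarterlyTax: [4]int{1500, 1500, 1500, 1400}}, // deliberately inconsistent
	}
	for _, f := range Audit(rows, []Assertion{QuarterlySumMatchesTotal}) {
		fmt.Println(f)
	}
}
```

Collecting all failures, rather than stopping at the first one, suits a batch setting where a reviewer wants the full audit report for a run.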

Enforcing accuracy along with usability

Our commitment to accuracy is operationalized through a robust governance framework designed for zero-error tolerance. Accuracy is engineered directly into our release pipeline. Our governance model mandates that every logic update is first isolated in a secure sandbox environment where it undergoes rigorous manual validation and automated test suites. We enforce a strict maker-checker protocol, requiring independent peer verification for every single change. No update is promoted to production without clearing a multi-stage change review and approval lifecycle. This entire workflow is underpinned by an immutable audit trail, comprehensive version control, and a bi-temporal data model. This architecture provides complete traceability, enabling us to retroactively query not just what changed, but the exact state of the logic on any given effective date.

What’s next

Ever wonder how millions of documents are generated seamlessly at scale? Our next post in this series will explore the infrastructure and scalability aspects of the Document Engine. Discover how Go's concurrency maximizes single-machine output, while Kubernetes intelligently scales across countless instances. Uncover the magic of Temporal for robust task orchestration and the cost-saving power of AWS Aurora Serverless v2 for dynamic database scaling. Get ready to explore the tech stack that handles massive, bursty document generation with surprising efficiency.

Disclaimer

Rippling and its affiliates do not provide tax, accounting, or legal advice. This material has been prepared for informational purposes only, and is not intended to provide or be relied on for tax, accounting, or legal advice. You should consult your own tax, accounting, and legal advisors before engaging in any related activities or transactions.

Author

Cezar Pokorski

Staff Software Engineer

Software Engineer passionate about elegant solutions, with 2+ years in Golang, 5+ in Python, even more with JS/TS, and a lifelong love for coding (and 8-bits) since his first 10 PRINT"Hello world": GOTO 10.

